MariaDB & K8s: Communication between containers/Deployments

In the previous blog, a backend Deployment resource was created from a YAML file, consisting of a single container (MariaDB) that acts as the backend.

In this blog, we are going to create a frontend container that communicates with the backend through a Service and other resources.

About Services

When an application runs through a Deployment, Pods are created and destroyed dynamically. Each Pod gets an internal IP address in the cluster when it is created. Because Pods are ephemeral, these addresses change, so there needs to be a stable way for Pods to communicate. A Service provides that: when it is created, K8s assigns it a stable cluster IP, and the Service name can be resolved to that IP through the cluster DNS.

A Service can be created by exposing the Deployment resource with the kubectl expose command. Let’s expose the Deployment as a Service on port 3306. This creates a Service of type ClusterIP (the default), which is reachable only inside the cluster. If the Service needs to be reachable from outside the cluster, one can use the NodePort type (more about Service Types). By default, the Service gets the same name as the exposed resource.
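For reference, the Service that the following kubectl expose command creates is roughly equivalent to the manifest below. This is a sketch, not the exact object kubectl generates. It assumes the Deployment's selector is app: mariadb, which the describe output further down confirms. kubectl copies the selector from the Deployment, and because no target port is given, the target port takes the same value as the port.

```yaml
# Sketch: roughly what `kubectl expose deployment/mariadb-deployment --port 3306` creates
apiVersion: v1
kind: Service
metadata:
  name: mariadb-deployment   # defaults to the name of the exposed Deployment
spec:
  type: ClusterIP            # the default type, reachable only inside the cluster
  selector:
    app: mariadb             # copied from the Deployment's selector (assumed app: mariadb)
  ports:
    - protocol: TCP
      port: 3306             # port the Service listens on
      targetPort: 3306       # container port; defaults to `port` when omitted
```

To print the exact object without creating it, append --dry-run=client -o yaml to the expose command.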

$ kubectl expose deployment/mariadb-deployment --port 3306

service/mariadb-deployment exposed

To check the Service, run kubectl get service mariadb-deployment (svc is the short form of service):

$ kubectl get svc mariadb-deployment

NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
mariadb-deployment   ClusterIP   10.103.111.200   <none>        3306/TCP   76s

To get more information about the Service, run the kubectl describe service command:

$ kubectl describe svc mariadb-deployment

Name:              mariadb-deployment
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=mariadb
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.103.111.200
IPs:               10.103.111.200
Port:              <unset>  3306/TCP
TargetPort:        3306/TCP
Endpoints:         172.17.0.12:3306,172.17.0.4:3306
Session Affinity:  None
Events:            <none>

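Note that the Endpoints are the IPs of the Pods selected by the Service, combined with the TargetPort. TargetPort is the container port the Service forwards traffic to, whereas Port is the port of the Service itself. If targetPort is not set, it defaults to the value of port. Keeping both equal, as here, keeps the setup simple.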
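To make the difference between Port and TargetPort concrete, here is a sketch of a hypothetical Service where the two differ. The name mariadb-alt-port is illustrative only and is not used elsewhere in this blog. Clients inside the cluster would connect to mariadb-alt-port:3307, and the Service would forward that traffic to port 3306 of the selected MariaDB containers.

```yaml
# Hypothetical Service: clients use port 3307, containers still listen on 3306
apiVersion: v1
kind: Service
metadata:
  name: mariadb-alt-port     # illustrative name only
spec:
  selector:
    app: mariadb             # same Pods as mariadb-deployment
  ports:
    - protocol: TCP
      port: 3307             # Service port (what clients connect to)
      targetPort: 3306       # container port inside the Pods (MariaDB's default)
```

With such a Service, the Endpoints would still be listed as <pod-ip>:3306, because endpoints always use the target port.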

Now we can use the Service name, instead of a Pod name, with kubectl exec to test the mariadb client. kubectl runs the command in the first Pod selected by the Service:

$ kubectl exec svc/mariadb-deployment -- mariadb -uroot -psecret -e "select current_user()"

current_user()
root@localhost

To delete the Service, run kubectl delete:

$ kubectl delete svc mariadb-deployment

service "mariadb-deployment" deleted

Alternatively, we can use a YAML file to create the Service. Let’s create the file mariadb-service.yaml (it can also be found on GitHub in mariadb.org-tools):

apiVersion: v1
kind: Service
metadata:
  name: mariadb-internal-service
spec:
  selector:
    app: mariadb
  ports:
    - protocol: TCP
      port: 3306
      targetPort: 3306

Note that a Service matches a set of Pods using labels and selectors. Labels are key/value pairs attached to objects at creation time or later on. The Service’s selector (here app: mariadb) targets every Pod carrying that label. To create the Service, run kubectl apply and display the result:

$ kubectl apply -f mariadb-service.yaml

service/mariadb-internal-service created

$ kubectl get svc mariadb-internal-service

NAME                       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
mariadb-internal-service   ClusterIP   10.100.230.139   <none>        3306/TCP   4s

For homework, run kubectl edit svc mariadb-internal-service and change the type to NodePort. The Service then gets a node port that is reachable from outside the cluster. kubectl get svc mariadb-internal-service shows it in the PORT(S) column as 3306:<node_port>/TCP. To reach it, combine the Node IP address (available from minikube ip) with that node port. Try to get the binary output using curl $(minikube ip):<node_port>.
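If you prefer to do this homework declaratively, the same change can be made in mariadb-service.yaml. The sketch below shows one possible version. type: NodePort is the only required change. The optional nodePort field pins the port, which must fall within the cluster's node port range (30000-32767 by default). The value 30306 is an arbitrary, illustrative choice; if nodePort is omitted, K8s picks a free port from that range.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mariadb-internal-service
spec:
  type: NodePort             # also expose the Service on every node's IP
  selector:
    app: mariadb
  ports:
    - protocol: TCP
      port: 3306             # cluster-internal Service port
      targetPort: 3306       # MariaDB container port
      nodePort: 30306        # illustrative; optional, must be in 30000-32767 by default
```

After applying this version, the Service would be reachable at $(minikube ip):30306.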

Create the frontend application

As the frontend application, we are going to use phpMyAdmin (the Bitnami image). We will run it as a new container in the same Deployment we created in the previous blog. So we are going to have a single Deployment with 2 Pods, each running both containers, plus the existing Service created in the previous step.

Before we proceed, we need to create a ConfigMap resource. A ConfigMap stores non-confidential key/value pairs that Pods can consume as environment variables, command-line arguments, or configuration files in a volume. This keeps configuration separate from the container images and lets other K8s objects reuse it. In this example, we are going to use a ConfigMap to store the name of the MariaDB Service. That value becomes DATABASE_HOST, the environment variable the phpMyAdmin application needs in order to find the database.

A ConfigMap can be defined in a file. Let’s call it mariadb-configmap.yaml (it can be found on GitHub). It looks as follows:

apiVersion: v1
kind: ConfigMap
metadata:
  name: mariadb-configmap
data:
  database_url: mariadb-internal-service

The data field has a key database_url whose value is the name of the Service that points to the MariaDB database created in the previous step.

To create and display the ConfigMap resource, run:

$ kubectl apply -f mariadb-configmap.yaml

configmap/mariadb-configmap created

$ kubectl get configmap mariadb-configmap

NAME                DATA   AGE
mariadb-configmap   1      41s

Try running kubectl describe configmap mariadb-configmap: you will see the Data field with its key/value pairs and an optional BinaryData field.

From here, we can either run the phpMyAdmin application as an additional container in the existing Pod, or create a dedicated Deployment for it. A dedicated Deployment is the recommended approach (do it for homework), since the frontend and the database can then be scaled and updated independently. Here, I decided to use the first method (1 Deployment whose Pods run 2 containers) to show how multiple containers can be used.

Let’s update mariadb-deployment.yaml and save it as mariadb-deployment-with-phpmyadmin.yaml (the code can be found on GitHub), so that its Pod template has 2 containers. It should look like this:

apiVersion: apps/v1
kind: Deployment # what to create?
metadata:
  name: mariadb-deployment
spec: # specification for deployment resource
  replicas: 2 # how many replicas of pods we want to create
  selector:
    matchLabels:
      app: mariadb
  template: # blueprint for pods
    metadata:
      labels:
        app: mariadb # service will look for this label
    spec: # specification for Pods
      containers:
        - name: mariadb
          image: mariadb
          ports:
            - containerPort: 3306
          env:
            - name: MARIADB_ROOT_PASSWORD
              value: secret
        - name: phpmyadmin
          image: bitnami/phpmyadmin:latest
          ports:
            - containerPort: 8080
          env:
            - name: DATABASE_HOST
              valueFrom:
                configMapKeyRef:
                  name: mariadb-configmap
                  key: database_url

Note that DATABASE_HOST gets its value from the ConfigMap, referenced by the name and key we created before. Let’s start this Deployment by applying the file, and then check the created Deployment and Pods.

$ kubectl apply -f mariadb-deployment-with-phpmyadmin.yaml
deployment.apps/mariadb-deployment created

$ kubectl get deploy
NAME                 READY   UP-TO-DATE   AVAILABLE   AGE
mariadb-deployment   2/2     2            2           15s

# Check Pods
$ kubectl get pods -l app=mariadb
NAME                                  READY   STATUS    RESTARTS   AGE
mariadb-deployment-6d654f6fdc-8hmcq   2/2     Running   0          3m21s
mariadb-deployment-6d654f6fdc-bx448   2/2     Running   0          3m21s

# Get information about deployment
$ kubectl describe deploy mariadb-deployment

Since we asked for 2 replicas, we ended up with 2 Pods, each running 2 containers. To get the logs of a specific container, run kubectl logs <pod-name> <container-name> (or kubectl logs <pod-name> -c <container-name>):

# Logs for MariaDB container

$ kubectl logs mariadb-deployment-6d654f6fdc-8hmcq mariadb

...

2022-03-18 13:52:35 0 [Note] mariadbd: ready for connections.

Version: '10.7.3-MariaDB-1:10.7.3+maria~focal' socket: '/run/mysqld/mysqld.sock' port: 3306 mariadb.org binary distribution

# Logs for phpmyadmin container

$ kubectl logs mariadb-deployment-6d654f6fdc-8hmcq phpmyadmin

...

phpmyadmin 13:52:30.20 INFO ==> Enabling web server application configuration for phpMyAdmin

phpmyadmin 13:52:30.29 INFO ==> ** phpMyAdmin setup finished! **

phpmyadmin 13:52:30.30 INFO ==> ** Starting Apache **

With kubectl exec, we can check that the client works. Since no container is specified with -c, kubectl defaults to the first one, mariadb:

$ kubectl exec mariadb-deployment-6d654f6fdc-8hmcq -- mariadb -uroot -psecret -e "select version()"

Defaulted container "mariadb" out of: mariadb, phpmyadmin

version()

10.7.3-MariaDB-1:10.7.3+maria~focal

To test the frontend, we have to create a Service that exposes the port of the phpMyAdmin application. Let’s do it with the kubectl expose command used earlier in this blog and display the result.

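Note that the type used here is LoadBalancer, which makes the Service reachable from outside the cluster. Load balancing across the 2 Pods is done by every Service type, including ClusterIP. Minikube supports this type. Unless minikube tunnel is running, the EXTERNAL-IP stays <pending>, as in the output below, but the Service is still reachable through the NodePort allocated for it. When describing the Service, one can find the Endpoints, whose IPs are the Pod IPs combined with the port of the phpmyadmin container.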

Note the -o wide flag, which prints the output in wide format, with extra columns such as SELECTOR.

$ kubectl expose deploy/mariadb-deployment --port 8080 --target-port 8080 --type LoadBalancer --name=phpmyadmin-service

service/phpmyadmin-service exposed

$ kubectl get svc phpmyadmin-service -o wide

NAME                 TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE    SELECTOR
phpmyadmin-service   LoadBalancer   10.97.244.156   <pending>     8080:31789/TCP   7m7s   app=mariadb

$ kubectl describe svc phpmyadmin-service

Name:                     phpmyadmin-service
Namespace:                default
Labels:                   <none>
Annotations:              <none>
Selector:                 app=mariadb
Type:                     LoadBalancer
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.97.244.156
IPs:                      10.97.244.156
Port:                     <unset>  8080/TCP
TargetPort:               8080/TCP
NodePort:                 <unset>  31789/TCP
Endpoints:                172.17.0.4:8080,172.17.0.9:8080
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
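For reference, the phpmyadmin-service created above with kubectl expose corresponds roughly to the manifest below. This is a sketch, not the exact generated object. kubectl copies the app: mariadb selector from the Deployment, so the Service matches both Pods. The node port (31789 in the output above) is assigned automatically unless a nodePort field is set.

```yaml
# Sketch: roughly what `kubectl expose deploy/mariadb-deployment --port 8080 --target-port 8080 --type LoadBalancer --name=phpmyadmin-service` creates
apiVersion: v1
kind: Service
metadata:
  name: phpmyadmin-service
spec:
  type: LoadBalancer         # also allocates a node port automatically
  selector:
    app: mariadb             # copied from the Deployment; matches both Pods
  ports:
    - protocol: TCP
      port: 8080             # Service port
      targetPort: 8080       # phpmyadmin container port
```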

Before testing phpmyadmin, let’s first create a sample database called “k8s”:

$ kubectl exec mariadb-deployment-6d654f6fdc-8hmcq -- mariadb -uroot -psecret -e "create database if not exists k8s; show databases;"

Defaulted container "mariadb" out of: mariadb, phpmyadmin
Database
information_schema
k8s
mysql
performance_schema
sys

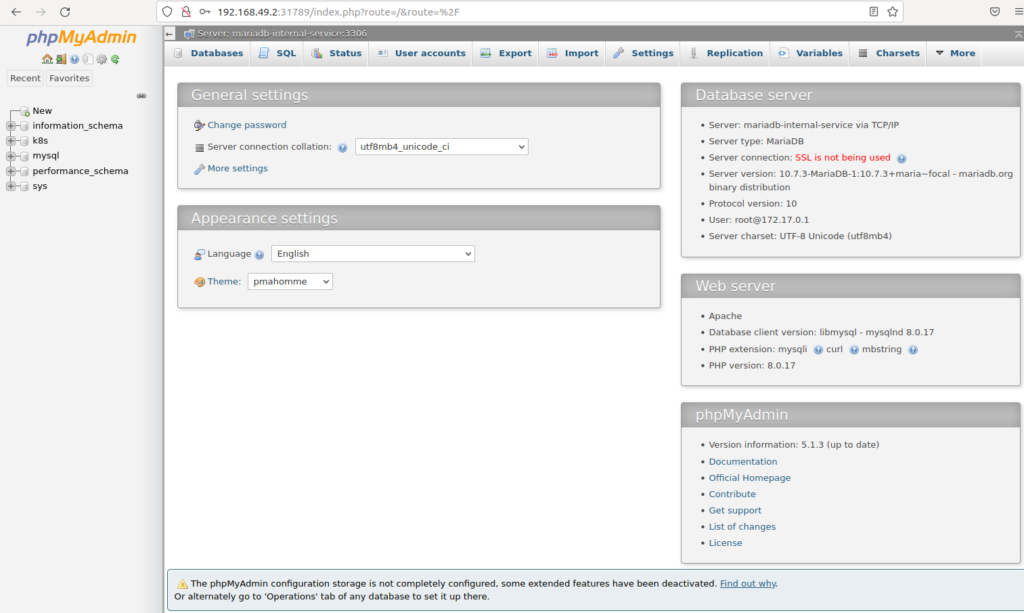

To test the phpmyadmin-service, run curl $(minikube ip):31789, or alternatively open the service in the browser:

$ minikube service phpmyadmin-service

|-----------|--------------------|-------------|---------------------------|
| NAMESPACE |        NAME        | TARGET PORT |            URL            |
|-----------|--------------------|-------------|---------------------------|
| default   | phpmyadmin-service |        8080 | http://192.168.49.2:31789 |
|-----------|--------------------|-------------|---------------------------|

Minikube opens the browser with the phpMyAdmin GUI, which connects to the MariaDB server through the internal MariaDB Service (note the “k8s” database created before).

Finally, all the K8s resources we needed (the ConfigMap, the 2 Services, and the Deployment) can be put into a single file, separating the resources with --- lines. This is done here on GitHub.

Conclusion and future work

In this blog, we have learned about new K8s resources, namely Services and ConfigMaps, their properties, and how to use them to let containers communicate. For some good homework, extend this tutorial to create a frontend Deployment, instead of a frontend container, that communicates with the backend Deployment.
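As a starting point for this homework, the frontend could get its own Deployment and Service along the lines of the sketch below. All names here (phpmyadmin-deployment, phpmyadmin-frontend-service, and the app: phpmyadmin label) are hypothetical. The key design choice is the distinct label. mariadb-internal-service selects app: mariadb, so the frontend Pods must carry a different label; otherwise the database Service would also send MariaDB traffic to them. The backend would then be the single-container mariadb-deployment from the previous blog.

```yaml
# Hypothetical frontend Deployment, separate from the MariaDB backend
apiVersion: apps/v1
kind: Deployment
metadata:
  name: phpmyadmin-deployment        # illustrative name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: phpmyadmin                # must differ from the backend's app: mariadb
  template:
    metadata:
      labels:
        app: phpmyadmin
    spec:
      containers:
        - name: phpmyadmin
          image: bitnami/phpmyadmin:latest
          ports:
            - containerPort: 8080
          env:
            - name: DATABASE_HOST    # still read from the ConfigMap created in this blog
              valueFrom:
                configMapKeyRef:
                  name: mariadb-configmap
                  key: database_url
---
# Hypothetical Service exposing only the frontend Pods
apiVersion: v1
kind: Service
metadata:
  name: phpmyadmin-frontend-service  # illustrative name
spec:
  type: LoadBalancer
  selector:
    app: phpmyadmin                  # selects the frontend Pods only
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
```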

In the next blog, we are going to learn about Secrets, K8s objects that keep confidential data out of application code. We will also cover StatefulSets, K8s resources that help preserve data consistency in K8s clusters.

You are welcome to chat about it on Zulip.

Read more

- Start MariaDB in K8s

- MariaDB & K8s: Communication between containers/Deployments

- MariaDB & K8s: Create a Secret and use it in MariaDB deployment

- MariaDB & K8s: Deploy MariaDB and WordPress using Persistent Volumes

- Create statefulset MariaDB application in K8s

- MariaDB replication using containers

- MariaDB & K8s: How to replicate MariaDB in K8s