Category Archives: LLM

Now is a good time to upgrade to the freshest MariaDB Server. MariaDB 11.8 is the yearly long term support release for 2025. It’s the first LTS with support for MariaDB Vector, and it includes a number of other updates based on user requests. You can seamlessly upgrade to MariaDB 11.8 from MariaDB 11.4 (the previous LTS) or any older release, back to MariaDB Server 10.0 or earlier, including most versions of MySQL Server.

MariaDB 11.8 LTS includes everything added since 11.4, incorporating changes from 11.5, 11.6, 11.7, as well as new features not released before.

…

We recently announced the winners of the MariaDB AI RAG hackathon that we organized together with the Helsinki Python meetup group. Let’s deep dive into the integration track winner. David Ramos chose to contribute a MariaDB integration for MCP Server. MariaDB plc was impressed by the results and has picked it up for further development with more features.

David, tell us about yourself. Why did you decide to join the hackathon?

I am an aspiring Data Scientist from Colombia. I studied Physics in college, but by the time I graduated, I realized what I enjoyed the most was working with data and programming, so I decided to make the shift to Data Science.

…

I think you’ve come to expect that every collaboration with Intel leads to meaningful, well-executed projects that bring great value to the community. This time, we’re spreading our wings towards empowering enterprises to deploy AI solutions, and we’re excited to show how MariaDB Vector fits into the Open Platform for Enterprise AI (OPEA).

🤖 Open Platform for Enterprise AI

The Open Platform for Enterprise AI, or simply OPEA, is a new sandbox-level project within the LF AI & Data Foundation. Intel plays a pivotal role in its active development, which once again shows Intel’s strong commitment to open-source innovation.

…

We at MariaDB Foundation are thrilled to see Amazon at the forefront of applying artificial intelligence to open source contributions — with MariaDB as their pilot.

At the May 6th MariaDB meetup in Bremen, Bardia Hassanzadeh, PhD, presented the Upstream Pilot tool: an AI-based assistant designed to help developers identify, analyze, and resolve open issues in MariaDB more effectively.

This initiative is the most promising we’ve seen for improving the open source contribution process. Bardia and Hugo Wen of Amazon gave us a preview last week and we were already very impressed.

…


The deadline for the ideation phase of the MariaDB AI RAG Hackathon is approaching: it closes on Monday, at the end of March.

We have several cool submissions so far. One combines a knowledge graph with LLMs, using MariaDB Vector nearest-neighbour search. Another is an “advanced context diff”, which identifies the differences between two text corpora based not on their literal wording but on their content.

All of the current submissions are in the Innovation track. We would particularly welcome submissions in the Integration track, adding MariaDB to frameworks such as these, or to other apps.
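In MariaDB Vector, the nearest-neighbour search mentioned above is an ordinary SQL query of the form `ORDER BY VEC_DISTANCE_EUCLIDEAN(embedding, VEC_FromText('[...]')) LIMIT k`. As a rough illustration of what that ordering computes, here is a minimal exact (brute-force) k-nearest-neighbour search in plain Python. The document names and 3-dimensional toy vectors are made up for the example; the server itself can answer such queries approximately through a vector index instead of scanning every row.

```python
import math
from typing import List, Sequence, Tuple

Row = Tuple[str, List[float]]  # (document id, embedding)


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance between two vectors of equal dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbours(query: Sequence[float], rows: List[Row], k: int = 3) -> List[Row]:
    """Exact k-NN: rank every row by distance to `query`, keep the first k.

    Conceptually the same result as
    ORDER BY VEC_DISTANCE_EUCLIDEAN(embedding, query) LIMIT k
    evaluated as a full scan, without an index.
    """
    return sorted(rows, key=lambda row: euclidean(row[1], query))[:k]


# Toy 3-dimensional embeddings (hypothetical); real models produce hundreds of dimensions.
documents: List[Row] = [
    ("graph", [0.9, 0.1, 0.0]),
    ("vector", [0.1, 0.9, 0.1]),
    ("sql", [0.2, 0.8, 0.3]),
    ("python", [0.0, 0.1, 0.9]),
]

if __name__ == "__main__":
    for doc_id, _ in nearest_neighbours([0.1, 0.85, 0.2], documents, k=2):
        print(doc_id)
```

Running it prints `vector` and then `sql`, the two toy documents closest to the query vector. A full scan is fine for a handful of rows; for large tables, an approximate index trades a little accuracy for much less work.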

…

We are excited to announce a hackathon with MariaDB Vector and Python. Since we are reaching outside our bubble, let’s start from the beginning:

MariaDB, the open-source database, powers the world’s most demanding applications, from Wikipedia to global financial institutions. Now MariaDB Vector is bringing AI-ready vector search natively into the open-source database world. MySQL users ahoy:

Our hackathon is your chance to explore AI possibilities with MariaDB Vector and Python. Whether you’re a developer, data scientist, or AI enthusiast, MariaDB Foundation invites you to build innovative AI applications, compete for prizes, and showcase your work.

…


We’re no mind readers, so from time to time, we like to do polls. Polls are quantitative in nature, so coming up with the right question is not enough – we need to do a bit of mind reading in coming up with the alternatives.

Quick development of text-based RAG apps

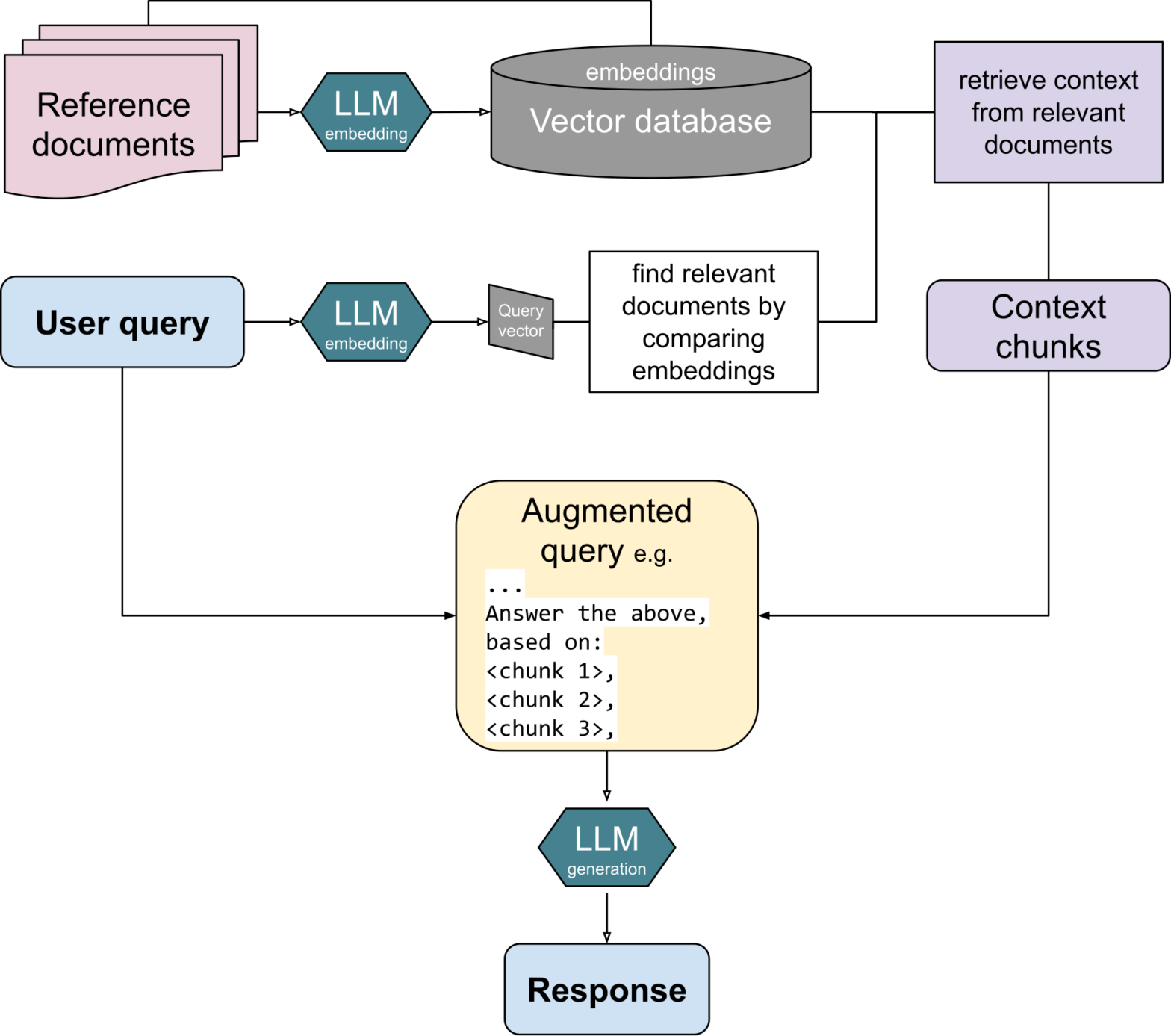

Our hypothesis was that RAG is the cool thing to do with vector-based databases, specifically text-based RAG. The conference talks we’ve given on MariaDB Vector (such as at the 24th SFSCON in Bozen, Südtirol, Italy on 8 Nov 2024) have stressed the value of being able to easily develop AI applications that answer user prompts based on knowledge in a specific body of text, rather than on the overall training data of an LLM.

…


The day has come that you have been waiting for since the ChatGPT hype began: You can now build creative AI apps using your own data in MariaDB Server! By creating embeddings of your own data and storing them in your own MariaDB Server, you can develop RAG solutions where LLMs can efficiently execute prompts based on your own specific data as context.
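Storing embeddings in MariaDB can be sketched as below, assuming MariaDB Connector/Python (which uses `?` placeholders) or any other DB-API cursor. The table name `docs`, its columns and the 4-dimensional `VECTOR(4)` are illustrative assumptions: the dimension must match whatever embedding model you use, and producing the embeddings themselves is out of scope here.

```python
from typing import Iterable, Sequence, Tuple

# Hypothetical dimension; it must match the output size of your embedding model.
EMBEDDING_DIM = 4

# A VECTOR INDEX requires the indexed column to be NOT NULL.
CREATE_TABLE_SQL = f"""
CREATE TABLE IF NOT EXISTS docs (
    id INT PRIMARY KEY AUTO_INCREMENT,
    content TEXT NOT NULL,
    embedding VECTOR({EMBEDDING_DIM}) NOT NULL,
    VECTOR INDEX (embedding)
)
"""

# VEC_FromText() converts the '[x,y,...]' text form into the binary VECTOR type.
INSERT_SQL = "INSERT INTO docs (content, embedding) VALUES (?, VEC_FromText(?))"


def vector_to_text(vec: Sequence[float]) -> str:
    """Format a vector as the JSON-style array text accepted by VEC_FromText()."""
    if len(vec) != EMBEDDING_DIM:
        raise ValueError(f"expected {EMBEDDING_DIM} dimensions, got {len(vec)}")
    return "[" + ",".join(repr(float(x)) for x in vec) + "]"


def store_embeddings(cursor, items: Iterable[Tuple[str, Sequence[float]]]) -> int:
    """Insert (text, embedding) pairs with one executemany(); return the row count."""
    params = [(text, vector_to_text(vec)) for text, vec in items]
    cursor.executemany(INSERT_SQL, params)
    return len(params)
```

With a real server you would pass `conn.cursor()` from `mariadb.connect(...)`, run `CREATE_TABLE_SQL` once, call `store_embeddings()` and then `conn.commit()`.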

Why RAG?

Retrieval-Augmented Generation (RAG) creates GenAI answers based on data of your own choice, such as your own manuals, articles or other text corpora. RAG answers are more accurate and fact-based than those of general-purpose Large Language Models (LLMs), and you get them without having to train or fine-tune a model.
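The retrieval and augmentation steps of RAG can be sketched as follows. This is a hypothetical example: it assumes a table `docs` with a `content` text column and an `embedding` column of type `VECTOR(n)`, and it assumes the question has already been embedded with the same model as the stored documents. It fetches the closest chunks with `VEC_DISTANCE_EUCLIDEAN()` and pastes them into the prompt; the call to the LLM itself is left out.

```python
from typing import List, Sequence

# Nearest-neighbour query: rank rows by distance to the query vector, keep k.
SEARCH_SQL = (
    "SELECT content FROM docs "
    "ORDER BY VEC_DISTANCE_EUCLIDEAN(embedding, VEC_FromText(?)) "
    "LIMIT {k}"
)


def retrieve(cursor, query_vec: Sequence[float], k: int = 3) -> List[str]:
    """Return the text of the k stored chunks closest to query_vec."""
    vec_text = "[" + ",".join(repr(float(x)) for x in query_vec) + "]"
    # k is forced to int before formatting, so it cannot smuggle SQL into the query.
    cursor.execute(SEARCH_SQL.format(k=int(k)), (vec_text,))
    return [row[0] for row in cursor.fetchall()]


def build_prompt(question: str, context_chunks: Sequence[str]) -> str:
    """Augment the user's question with the retrieved context for the LLM."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

The distance query only ranks rows, so `k` decides how much of your own data the LLM sees: too few chunks can miss the relevant passage, while too many make the prompt longer.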

…
